The data centre is arguably the iconic architectural typology of the information age. Like a weed, it can exist in extreme environments, reproduces quickly, and can overrun delicate ecosystems. To date, its adaptability has been enabled by the extreme standardisation of its component parts. With advances in artificial intelligence enhancing this adaptability, we are now witnessing the data centre as a catalyst for a new urban habitat. This article is about a cybernetic wilderness – dominated by data centres – that is present and growing on the edges of Dublin city; a wilderness where machines commune with machines and the human is pushed to the periphery.

Fig. 1. Martello Tower, Shenick Island, Skerries, Co. Dublin.

Weeds, relics, and ghosts

In his seminal text on the erosion of biodiversity, The End of the Wild, Stephen M. Meyer identifies two categories of species: the ‘Weedy’ and the ‘Relic’.[1] Weedy species are adaptive generalists; they flourish in various ecological settings, especially human-made environments. They can sustain themselves on a diverse range of food types, and they expand into, then overrun, ecosystems through rapid reproduction. Weedy species, by their very nature, seek to territorialise and dominate ecosystems, with catastrophic effects. They quickly adapt to local conditions and, through force of numbers, overrun less adaptive species. Once the system is overrun, its prior complex heterogeneity is replaced by the invader’s simple homogeneity. Examples of Weedy species include jellyfish, black rats, pigeons, cockroaches, coyotes, cheatgrass, dandelions, European buckthorn, knapweed, and humans.

Relic species rarely survive the process of being territorialised. Slow to adapt, Relic species are specialists. They tend to be highly integrated into their particular ecosystem, and any disruption to that system can profoundly affect them. Relic species usually cannot coexist easily with humans. Examples include large mammals such as the elephant, the white rhino, and the giant panda; predators such as the big cats; and endemic species such as the unique animal and plant life of the Galápagos. As humans (and the Weedy species that follow in our wake) territorialise new ecosystems, the invaded system’s ability to sustain Relic species naturally is weakened, disrupted, or destroyed. If territorialisation continues unchecked, the Relic loses its ecological value and is destined to become an ecological ornament.

Relic species face extinction unless protected by direct and continuous human intervention. The ‘Ghosts’ face a more extreme scenario: these species face inevitable extinction because they are not deemed worthy of human protection. I will use Meyer’s framework as a lens through which to view two categories of architecture. The first, failing to adapt to the world around it, was always destined to become a Relic. The second, open and adaptable, is a Weedy architecture that is actively proliferating across the Irish landscape.

A local genealogy of communications architecture

In Ireland, we have fifty Martello Towers. The British Empire built them in the late eighteenth and early nineteenth centuries as a line of coastal defence against a French attack. The typology was cheap to construct and difficult to capture and destroy. Each tower was an individual node that worked in concert with the others. Together they formed a skein of 153 towers strung across the Irish and English coastlines, a mass-surveillance network. The Martello Tower typology takes its name from a sixteenth-century defensive tower at Mortella Point, Corsica. That tower was built as part of an eighty-five-strong network designed to warn of an impending Turkish attack. The original tower at Mortella Point was round in plan and contained a gun for defence and a beacon fire for signalling to local forces. Intended to defend sites of strategic importance, the Martello Towers were typically built in populated areas.[2]

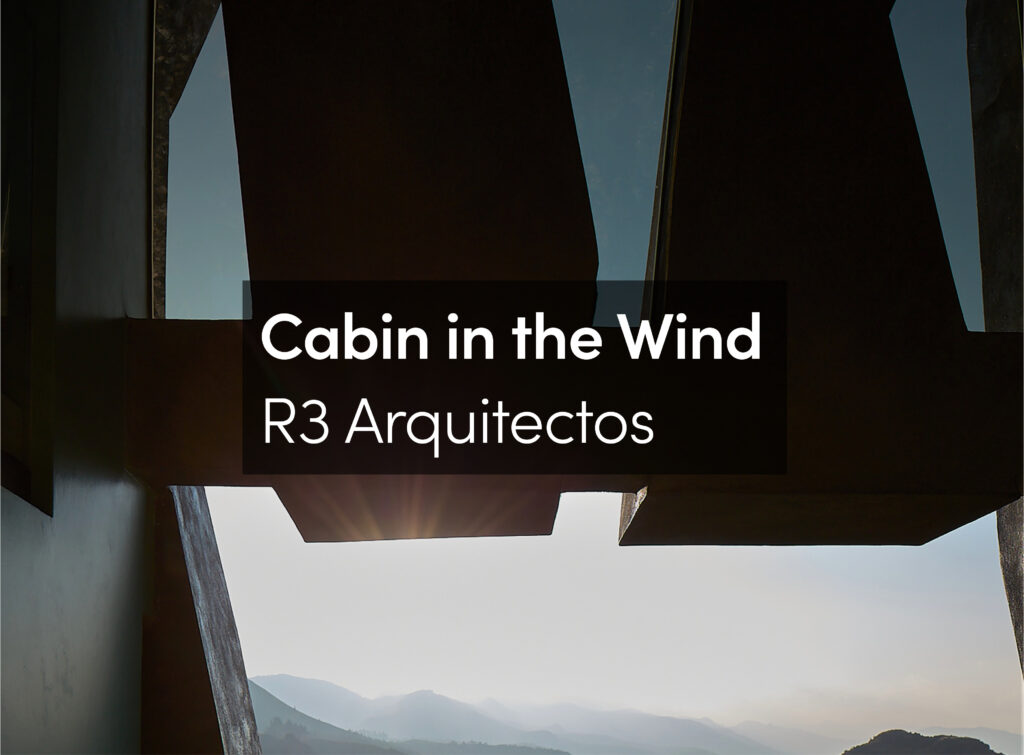

The Signal Tower, a typological cousin of the Martello Tower, was mass-built by the British between 1801 and 1804. The Signal Towers were not designed to house artillery but were dedicated entirely to surveillance and communication. These towers were square in plan, had two storeys and a flat roof, and were typically sited in remote coastal locations. They used a communication system called an optical telegraph. Each tower needed at least two other towers within sight so that it could pass a message along the network. The system used flags on a clear day and signal fires in conditions such as low cloud or sea fog. Eighty-one Signal Towers were proposed in the 1804–1806 plans. With the towers operating in concert, a message could circumnavigate the Irish coastline, some 1,076 km. Its final destination was the Pigeon House Fort at Dublin Port.[3] Both types of tower were obsolete on delivery; the French invasion never arrived. The Martello Towers and the Signal Towers failed to evolve and adapt. Some became Relics, repurposed as homes or museums; others became Ghosts, unwanted and neglected, ruins in the landscape. Both the Martello and Signal Towers functioned only when connected to a much larger network. They share this characteristic with their contemporary descendant, the data centre.
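The relay principle can be sketched as a search over a graph of sight lines: a message can only hop between towers that can see each other. The tower names and links below are hypothetical placeholders, not the historical network. Only Pigeon House, the network's terminus, comes from the text. The sketch uses breadth-first search, a standard graph technique, to find the shortest chain of visible towers. It also shows that, in a linear chain, losing a single tower stops the message entirely.

```python
from __future__ import annotations

from collections import deque
from typing import Optional

# Hypothetical line-of-sight links: each tower lists the towers it can see.
# These are placeholders, not the historical Signal Tower stations.
LINKS = {
    "Tower A": ["Tower B"],
    "Tower B": ["Tower A", "Tower C"],
    "Tower C": ["Tower B", "Pigeon House"],
    "Pigeon House": ["Tower C"],
}


def relay_path(
    links: dict[str, list[str]],
    start: str,
    goal: str,
    out_of_service: frozenset[str] = frozenset(),
) -> Optional[list[str]]:
    """Breadth-first search for the shortest chain of mutually visible towers."""
    if start in out_of_service or goal in out_of_service:
        return None
    queue = deque([[start]])  # each entry is the chain of towers so far
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in links.get(path[-1], []):
            # A message can only pass to a working tower not yet on the route.
            if nxt not in seen and nxt not in out_of_service:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no unbroken chain of sight lines exists
```

Consider what happens when one intermediate tower is marked as out of service, for example `frozenset({"Tower B"})`. In that case `relay_path` returns `None`, because no tower is of any use on its own.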

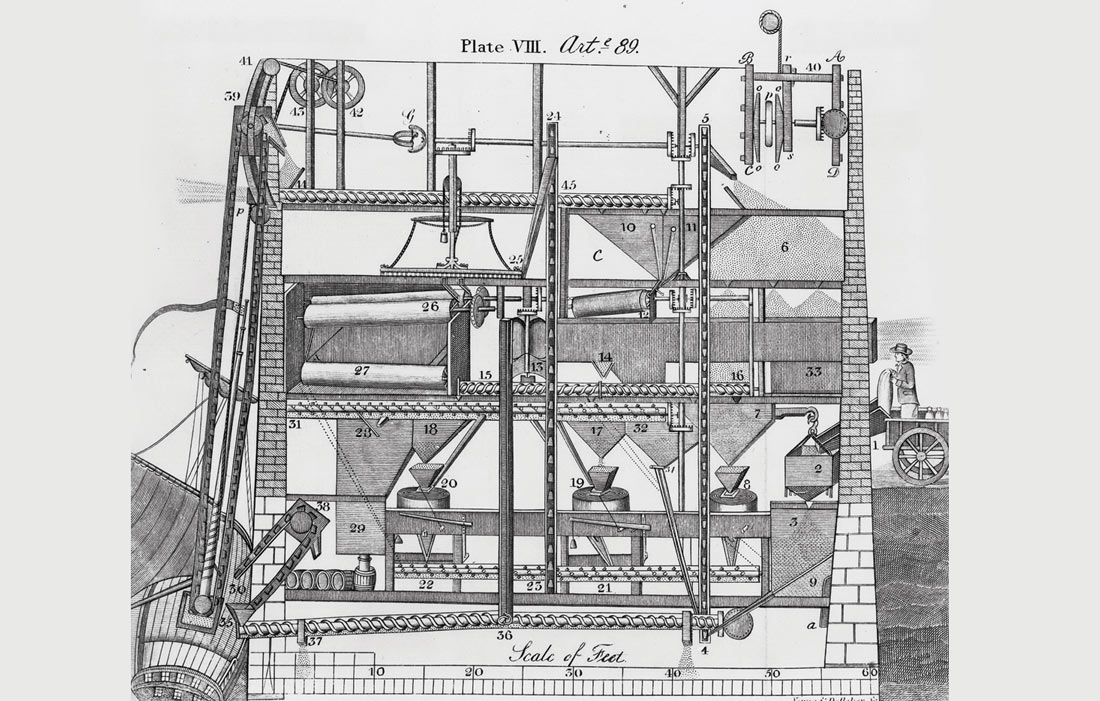

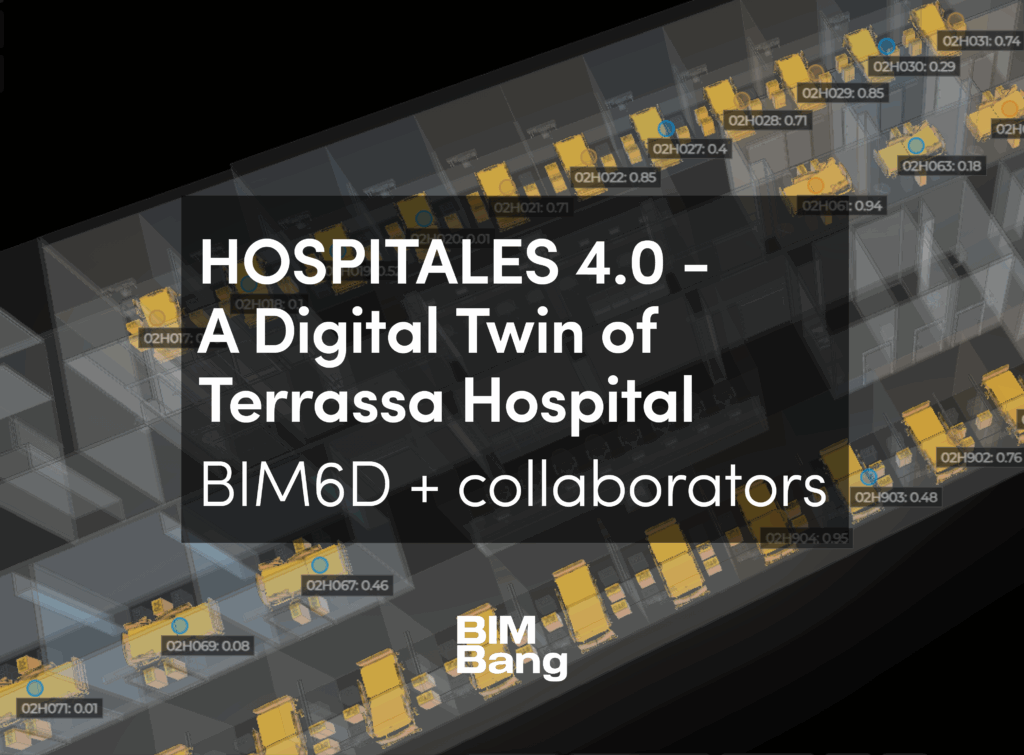

Fig. 2. Section of an automated mill by Oliver Evans.

The data centre

Simply put, a data centre is a container which organises a company’s ICT (Information and Communication Technology) equipment such as servers, switches, and storage facilities. The data centre manages the environmental conditions of the space (temperature, humidity, and dust) to ensure that the ICT systems operate reliably, safely, and efficiently. The following are the most common data centre types:

1. A telecom data centre is owned and operated by a large-scale telecommunications provider such as BT (British Telecom), Vodafone, AT&T, or Three. These facilities require very high connectivity and are generally responsible for driving content delivery, mobile services, and cloud services.

2. A colocation data centre rents physical space, power, bandwidth, IP addresses, environmental control systems, and in-house security to companies that require data security and a reliable connection to a network.

3. A hyperscale data centre is the backbone of the cloud-computing business model. It is typically a facility owned and operated by a single company, such as Amazon Web Services (AWS), Google/Alphabet, Apple, Facebook, or Microsoft. The hyperscale data centre enables these companies to carry out an extraordinary quantity of daily transactions: four billion Google searches, 500 million tweets, 254 million Alibaba orders, 350 million new Facebook photo uploads, and 235 million QQ messages.[4]

As many organisations with medium and smaller data centres migrate their workloads into the cloud, the profile of the world’s data centres will move towards larger facilities.

Like the Signal Tower and the Martello Tower, the data centre is more than a discrete and immutable architectural object; to function, each of the three requires connection to a broader network. A lone data centre is a liability, vulnerable to outages, cyberattacks, and other events that could be catastrophic to the data housed within. Data security comes from linking many data centres together and endlessly cycling data around the network in duplicate and triplicate. For example, Amazon Web Services divides the world into twenty-two regions; one such region is EU-West 1, known locally as Ireland. EU-West 1 is subdivided into three Availability Zones (AZs), each made up of several data centres.[5] This vast assemblage of discrete data centres is linked together through virtualised infrastructures and a planetary-scale network of on-land and subsea cabling. That assemblage enables global connectivity and data security on the Amazon Web Services platform.
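A small sketch can illustrate the idea that security comes from spreading copies across many linked facilities. Everything in it is hypothetical. The zone and data-centre identifiers are placeholders. The hash-based placement is a simple stand-in, not how AWS actually places data. The failure calculation assumes that zones fail independently of one another, an assumption that real, correlated outages can violate.

```python
from __future__ import annotations

# Hypothetical region with three Availability Zones, each containing several
# data centres. Identifiers are placeholders, not AWS's real names.
REGION = {
    "az-a": ["dc-a1", "dc-a2", "dc-a3"],
    "az-b": ["dc-b1", "dc-b2"],
    "az-c": ["dc-c1", "dc-c2", "dc-c3", "dc-c4"],
}


def place_replicas(region: dict[str, list[str]], key: str) -> list[str]:
    """Put one copy of an object in each zone, choosing a data centre by a simple hash."""
    replicas = []
    for _zone, centres in sorted(region.items()):
        # Deterministic choice within the zone (an illustrative stand-in for real placement).
        replicas.append(centres[sum(key.encode()) % len(centres)])
    return replicas


def probability_all_copies_lost(p_zone_failure: float, copies: int) -> float:
    """Chance that every copy is lost at once, ASSUMING zones fail independently."""
    return p_zone_failure ** copies
```

As a worked example, suppose there are three copies and each zone has a hypothetical one percent chance of failing. Then `probability_all_copies_lost(0.01, 3)` comes to about one in a million. A lone data centre, by comparison, would lose its data with a one-in-a-hundred chance.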

Data centres are warehouse-scale computers in which, at every scale, absolute homogeneity of the parts is fundamental to success. The data centre’s physical architecture is essentially a kit of parts designed to be assembled on site, then disassembled when renovation is required. A data centre’s IT systems are likewise composed of highly standardised components designed to be ‘hot-swapped’ when an upgrade is needed. The entire build process is exceptionally efficient; a hyperscale data centre can be completed in as little as twelve months. As with a Weed, this efficiency, adaptability, and speed of delivery enable the data centre to proliferate widely and thrive in even the most extreme environmental conditions.

Fig. 3. Bray Head Signal Station, Valentia Island. Merlo Kelly, 2020.

Making a relic of the human

In 1785, the American inventor Oliver Evans set out to eliminate the human worker from the flour-manufacturing process and created the first automated factory. Contemporary engineering calls this post-human production process ‘lights-out manufacturing’, and it is the holy grail of factory automation. The data centre, as the backend to cloud computing, is fundamental to contemporary forms of lights-out automation. It is equally fundamental to the physical-to-digital transformation of businesses such as banking. The data centre simultaneously territorialises and deterritorialises the physical spaces of human interaction.

Evans described his automatic flour mill, built at Red Clay Creek, Delaware, as follows:

‘These five machines … perform every necessary movement of the grain, and meal, from one part of the mill to another, and from one machine to another, through all the various operations, from the time the grain is emptied from the wagoner’s bag … until completely manufactured into flour … without the aid of manual labour, excepting to set the different machines in motion.’[6]

Evans strove to create a fully automated facility in which human hands never touch the product during the entire manufacturing process. In theory, a contemporary lights-out plant would operate twenty-four hours a day, seven days a week, with downtime only for routine maintenance or repair. FANUC, a Japanese robotics company, operates a factory that can run in lights-out mode for up to six hundred hours, with humans required only for routine maintenance.

Lights-out automation is responsible for the data centre’s metamorphosis. It has turned an architecture designed around the human user into an urban-scale computer, optimised for machines and free to operate without consideration of human comfort. For example, Google’s data centre in St Ghislain, Belgium, allows its server rooms to run so hot on certain days in high summer that human operators cannot safely enter.[7] Other new types of data centre are designed to run entirely free of humans. This new generation of data centre is geographically independent. It can be placed in remote, inhospitable locations where land is cheapest or where land speculation does not yet exist. One example is Microsoft’s Project Natick, an experimental data centre that spent two years on the bottom of the North Sea off Orkney.

Deterritorialisation refers to the intervention or appearance of components that destabilise an assemblage, either by causing it to change or even by causing an entirely new assemblage to emerge. When planning a data centre, the real estate strategy usually targets sites with abundant and reliable power capacity, cheap land, and fibre-optic connections. It also looks for proximity to customers and other data centres and, preferably, a low local population. The philosopher Manuel DeLanda states: ‘Deterritorialising processes include any factor that decreases density, promotes geographical dispersion, or eliminates some rituals … [deterritorialisation] weakens links by making people less interdependent, by increasing geographical mobility.’[8] Since 2010, Amazon Web Services has purchased three sites in the Dublin suburb of Tallaght: a former supermarket distribution centre, a former microelectronics factory, and a former biscuit factory. The biscuit factory and the distribution centre each employed, on average, two hundred workers; the microelectronics factory employed an average of 340. The average data centre employs between five and thirty people, and with advances in automation, this average will drop further in the coming years. Such low worker density can be attractive to a local county council. The council receives substantial commercial rates without the need for serious investment in housing, schools, and transport to support the data centre. Data centres generate tax revenue but employ few people, so they are unlikely to change local residents’ economic fortunes. As Graham Pickren asks, if the data centre is the ‘factory of the 21st century, whither the working class?’[9]
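The scale of that change can be worked out from the figures above. Assume, purely for illustration, that each of the three Tallaght sites becomes a single data centre staffed within the five-to-thirty range cited. The loss of direct employment on the sites then follows directly:

$$
\underbrace{200 + 200 + 340}_{\text{former industrial jobs}} = 740,
\qquad
\underbrace{3 \times [5,\,30]}_{\text{data-centre jobs}} = [15,\,90],
\qquad
1 - \tfrac{90}{740} \approx 0.88, \quad 1 - \tfrac{15}{740} \approx 0.98.
$$

On those assumptions, direct employment on the three sites falls by roughly 88 to 98 percent.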

As the data centre’s physical form territorialises our industrial heartlands, its computational processes deterritorialise the high street. In March 2021, Bank of Ireland announced it was closing 103 branches on the island of Ireland. With the shift accelerated by nationwide COVID-19 lockdowns, the bank is now moving much of its operations from physical to digital banking. This transition would not be possible without the massive computing power made available through data centres. This rapid erosion of the physical spaces of banking further excludes the unconnected.

An invasive species

A data centre radically transforms the quality of energy it consumes; it takes in enormous quantities of high-grade electricity and expels equally large volumes of heat. Too much heat causes server failure, making the design and manufacture of thermal management systems one of the most challenging aspects of data centre design. In recent years, the Irish data centre industry’s energy consumption has come into sharp critical focus. One proposed Amazon facility is forecast to consume four percent of Ireland’s total electricity demand. Collectively, the data centre industry is expected to consume thirty-one percent of Ireland’s total electricity output by 2027.[10]

The increasing visibility of this vast energy consumption, and of its carbon footprint, is highly problematic for the industry. The immense quantities of heat exhausted further underscore the dirty materiality of data processing. Together, the electricity consumed and the heat exhausted destabilise the carefully crafted utopian fantasy of the cloud as an ephemeral megastructure that treads lightly on the earth.

On 15 December 2020, Codema, Dublin’s energy agency, announced the awarding of a contract to develop Ireland’s first large-scale district heating system.[11] The contract is the first in Ireland and the UK to recycle data centre waste heat. The system, titled ‘Heat Works’, is located in the south Dublin suburb of Tallaght. Heat Works will supply public, private, and residential buildings with waste heat exhausted from a recently completed Amazon Web Services data centre. The scheme is estimated to reduce local carbon emissions by 1,500 tonnes per year. The data centre now performs dual roles at the centre of a new kind of ecology: private data processing plant and public heating utility. Instead of retreating from the public eye, this Weedy species is further entangling itself in the physical fabric of the urban realm.

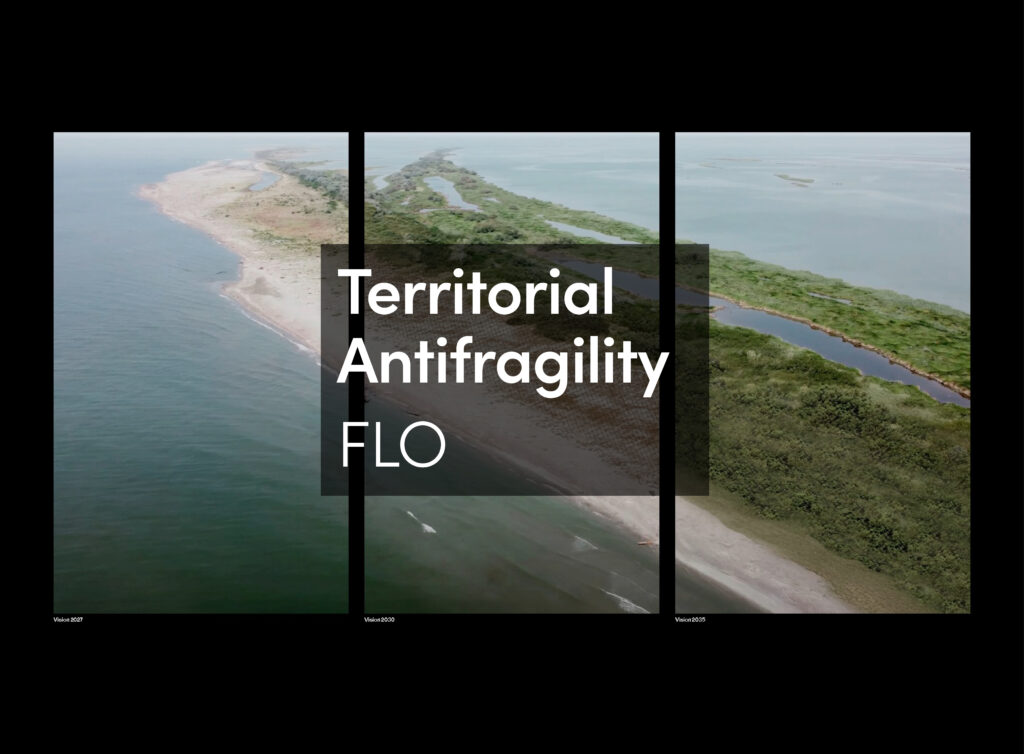

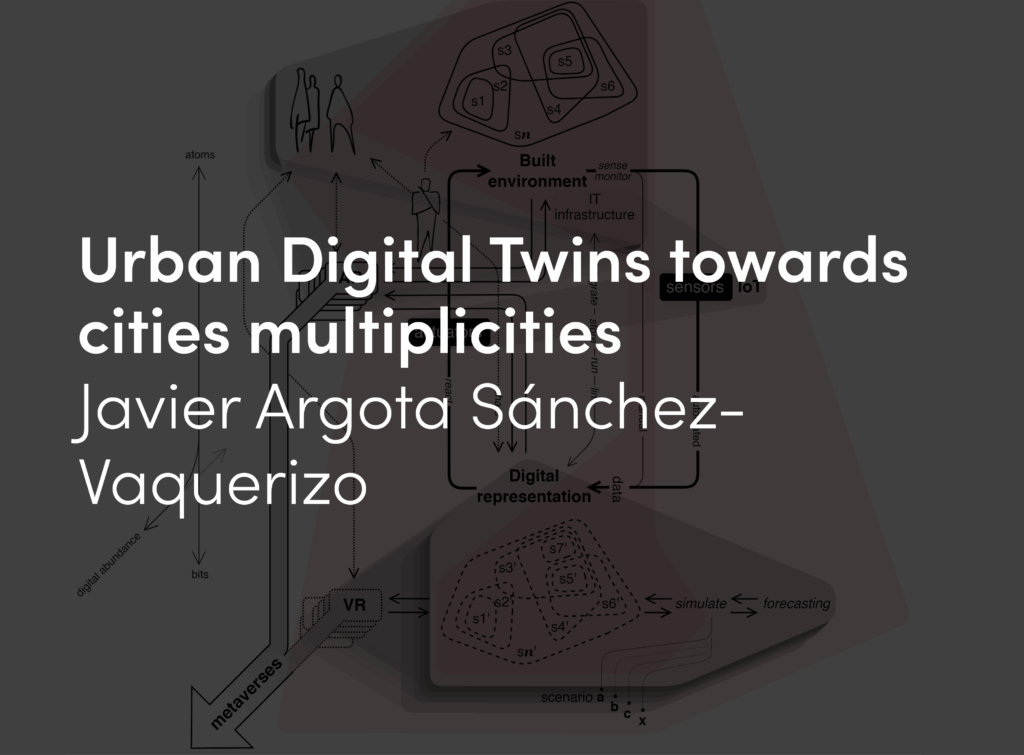

Fig. 4. Aerial view of Google and Microsoft data centres, Grangecastle Business Park, Co. Dublin.

A confident system

In 2016, DeepMind, a subsidiary of Google/Alphabet, announced it was training several artificial neural networks (ANNs) with deep reinforcement learning. Its goal was to regulate the management of Google’s data centre cooling operations. The AI yielded impressive results, saving, on average, thirty percent on energy usage per month. In 2018, Google announced it had effectively handed control to the algorithm, which now autonomously manages the cooling systems at several data centres. Deep reinforcement learning teaches a computational system what to do, that is, how to map situations to actions, so as to maximise a numerical reward signal. The learner is not told which actions to take. It must instead discover through trial and error which actions yield the most reward.

In 2016, an AI called AlphaGo, trained to play the ancient Chinese board game Go, defeated the professional player Lee Sedol by four games to one. AlphaGo learned by studying millions of historical games played by human experts. According to Lee, AlphaGo did not play conservatively and mechanically, as one would expect a computer to play; he described its playing style as confident, aggressive, and creative. AlphaGo uses experience replay, a technique inspired by what happens in the human brain’s hippocampus during sleep. Experience replay is essentially a system that stores a pool of past experiences that can be drawn on during rapid learning sessions. Designers reported a severe deterioration in performance when experience replay was disabled.

AlphaGo Zero, the successor to AlphaGo, was tasked with learning from scratch, without human guidance. Zero built its knowledge base by playing Go against itself millions of times. It started poorly, gradually improving by identifying successful moves and patterns. When the two were pitted against each other, AlphaGo Zero defeated AlphaGo one hundred games to nil.
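The trial-and-error loop and the experience replay described above can be sketched in a few lines. This is a minimal, hypothetical example, not DeepMind's system. It swaps the deep neural network for a simple lookup table (tabular Q-learning, a classic reinforcement-learning algorithm). The 'cooling plant' is a toy with five temperature bands and two actions, and its energy costs and overheating penalty are invented for illustration. The agent is never told which action is correct. It receives only a numerical reward, and it keeps relearning from a replay pool of stored past transitions.

```python
from __future__ import annotations

import random
from collections import deque

# Toy, hypothetical cooling problem (not DeepMind's system):
# states are temperature bands 0..4, where band 4 means the servers overheat.
N_STATES = 5
LOW, HIGH = 0, 1                   # two cooling actions
ENERGY = {LOW: 1.0, HIGH: 3.0}     # invented energy cost of each action
OVERHEAT_PENALTY = 10.0            # invented penalty for reaching band 4


def step(temp: int, action: int) -> tuple[int, float]:
    """Apply a cooling action; return the next temperature band and the reward."""
    nxt = min(temp + 1, N_STATES - 1) if action == LOW else max(temp - 1, 0)
    reward = -ENERGY[action]
    if nxt == N_STATES - 1:
        reward -= OVERHEAT_PENALTY
    return nxt, reward


def train(steps: int = 5000, batch: int = 8, alpha: float = 0.1,
          gamma: float = 0.9, epsilon: float = 0.2, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning with an experience-replay buffer."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]: estimated value
    replay = deque(maxlen=1000)                # pool of past experiences
    temp = rng.randrange(N_STATES)
    for t in range(steps):
        # Trial and error: usually exploit current estimates, sometimes explore.
        if rng.random() < epsilon:
            action = rng.randrange(2)
        else:
            action = max((LOW, HIGH), key=lambda a: q[temp][a])
        nxt, reward = step(temp, action)
        replay.append((temp, action, reward, nxt))
        # Experience replay: learn from a random sample of stored transitions.
        if len(replay) >= batch:
            for s, a, r, s2 in rng.sample(list(replay), batch):
                target = r + gamma * max(q[s2])
                q[s][a] += alpha * (target - q[s][a])
        # Begin a fresh episode every 20 steps from a random temperature band.
        temp = rng.randrange(N_STATES) if (t + 1) % 20 == 0 else nxt
    return q
```

The table returned by `train()` holds one row per temperature band. Comparing the two values in a row shows which action the agent has come to prefer in that band. In this toy, the agent learns to switch to high cooling in the top bands before the servers overheat, without ever being instructed to do so.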

Learning from scratch produced a far more robust AI. This suggests that AlphaGo Zero’s predecessor, AlphaGo, had been held back by training on a pool of human experience.[12] ‘By not using human data – by not using human expertise in any fashion – we’ve actually removed the constraints of human knowledge’, said AlphaGo Zero’s lead programmer, David Silver.[13]

Designing an AI to play the board game Go is one kind of challenge; developing an AI to manage complex industrial-scale processes is quite another. Suppose a data centre’s thermal management consisted of two dials: one for a fan and one for air conditioning. In that case, an engineering team could potentially map out every combination of that system’s settings. In actuality, a data centre’s thermal management system is a large-scale technical ensemble with perhaps billions of possible setpoint combinations. DeepMind’s data centre AI was given the task of reducing the cost of data centre energy consumption. To do this, the AI had to search that vast space of setpoint possibilities. The AI is given control to develop and implement its own preferred operational settings; as it gains experience at a given task, it improves. Weather plays an integral role in the cooling operations of a data centre, and DeepMind’s AI is trained to understand the unique complexity of each centre’s location. For example, during a tornado watch at a Google data centre in the American Midwest, the AI altered the plant’s settings in a way that human operators found counterintuitive. The change initially baffled engineers. On analysis, they found that the AI had taken advantage of regional weather conditions. A severe thunderstorm involves a significant drop in atmospheric pressure coupled with dramatic temperature and humidity fluctuations. The AI used the sudden localised drop in air temperature to temporarily reduce the intensity of its cooling operations and so save energy.[14] For now, data centres are the primary testing sites for DeepMind’s technology, but the company plans to mass-market this system to other sectors of industry. One might expect its deployment in office and residential space to follow.
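Simple arithmetic shows why a two-dial system can be charted but a real plant cannot. When every control can be set independently, the number of configurations is the product of the number of positions on each control. The control counts below are hypothetical, chosen only to show how quickly that product reaches the billions.

```python
from __future__ import annotations

import math

SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def setpoint_combinations(levels_per_control: list[int]) -> int:
    """Count the configurations when every control can be set independently."""
    return math.prod(levels_per_control)


# Hypothetical two-dial system: a fan and an air-conditioning unit, ten positions each.
two_dials = setpoint_combinations([10, 10])
# Hypothetical plant: twenty controls (chillers, pumps, valves, fans), three positions each.
plant = setpoint_combinations([3] * 20)
# Years needed to try every plant configuration once, at one configuration per second.
years_to_exhaust = plant / SECONDS_PER_YEAR
```

Here `two_dials` is 100, a table an engineer could draw up by hand. `plant` is 3,486,784,401. Trying each of those configurations for just one second would take about 110 years. A learning system therefore samples the space and generalises from what it finds, rather than enumerating every setting.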

Data centres such as the one at St Ghislain may soon be capable of operating lights-out. As the AWS/Tallaght district heating partnership shows, data centres are beginning to play a strategic role as urban utilities. At Grangecastle Business Park (GBP), an industrial park on the edge of Dublin city, one may find the outlines of a more complex kind of ecology. GBP is home to a Google data centre, a Microsoft data centre,[15] and many other large-scale manufacturers. Codema ranks GBP as the next-best site in Dublin for a district heating system.[16]

Furthermore, a company called Grange Energy Centre Ltd has sought permission to build a gas-fired combined heat and power (CHP) plant. The plant is designed both to produce power and to provide low-carbon heat and steam for the park’s heating and cooling needs.[17] The potential efficiencies of these reciprocal smart energy networks are seen as tools for combating climate change. They are attracting significant investment through projects such as the EU-funded STORM[18] and TEMPO[19]. Both are experimental smart thermal networks built with cutting-edge, AI-operated smart controllers designed explicitly for district heating and cooling networks. Moreover, DeepMind is actively researching ways to enable cooperation between its AI and non-DeepMind AI. If that breakthrough occurs, one can imagine a new form of machinic ecosystem connecting the data centre, the power station, and the smart heating network.

A cybernetic wilderness

The data centre is a Weedy species. The standardisation of its form means it can thrive and flourish in most ecosystems; its overall performance is enhanced when the human is removed. When its existence was challenged, it doubled down and territorialised the very ancient means by which humans derive comfort: our systems of heating.

A wilderness is described in the opening paragraphs of the US Wilderness Act of 1964 as ‘an area where the earth and its community of life are untrammelled by man, where man himself is a visitor who does not remain’. Are we witnessing the outlines of a new form of cybernetic wilderness, an ecosystem of machine-to-machine decision making opaque to human perception, with the data centre at its heart? In this wilderness, the human would be a visitor, required only for irregular maintenance operations; in this new kind of wilderness, the Weeds make a Relic of the worker.