This essay is the first in a series at the intersection of architecture, neuroscience and technology. In such a design-oriented context, the first question that stands out is: Why neuroscience? This question unfolds into broader territories and raises others, such as: Why is that field of scientific research relevant to a discipline such as architecture?

To address these questions, this piece studies two objects. On the one hand, it examines responsive architecture. On the other, it looks at computational neuroscience, the branch of neuroscience under consideration here. Ultimately, both territories intersect in technology, chiefly computation. In what follows, I first sketch a brief history of neuroscience and then lay out the main architectural problematic and the relevance of neuroscience within it.

“The biology of man had evolved to give modern man a whole new set of nerves” Loos, 1921

Treating the brain as a special object dates back to the pharaohs: the ancient Egyptians had a term for it and were able to diagnose brain damage as early as the 1700s BC. Nevertheless, they scooped the brains out of the dead before mummification, since they considered the heart to be the center of intelligence. Throughout recent history, working with the brain, and the way we understand it, has been characterized by three methods: biological approaches, chemical approaches and, finally, electrical approaches, the last of which unlock a whole new world of comprehending and interacting with the brain.

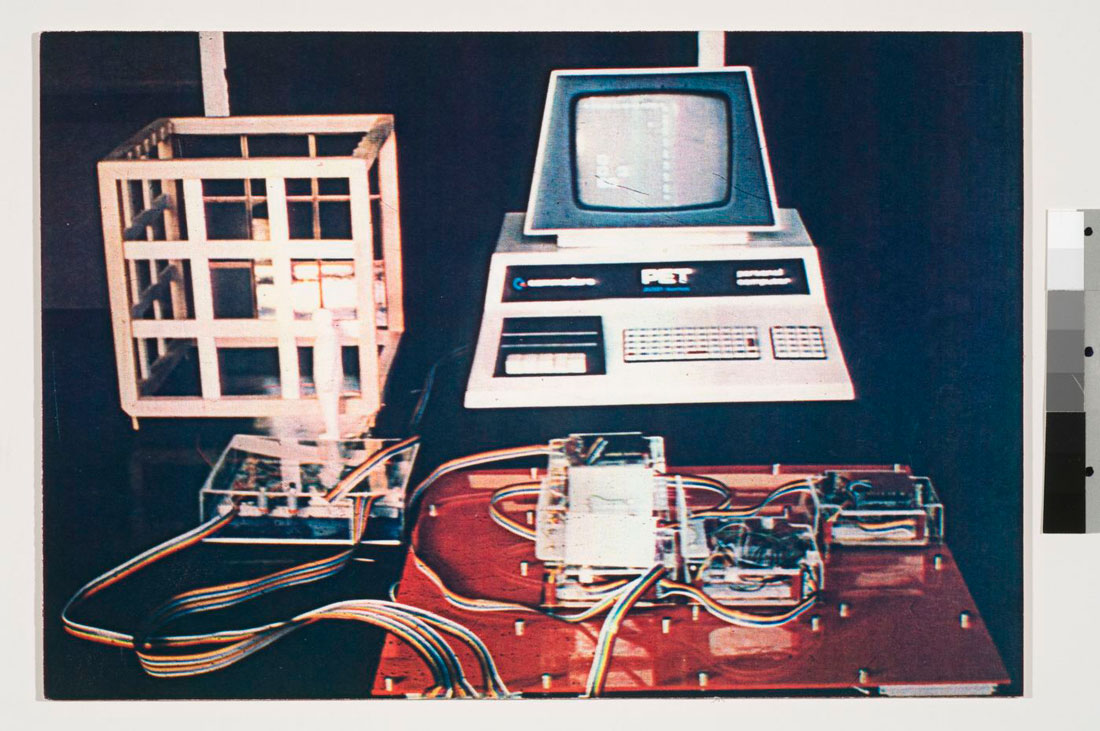

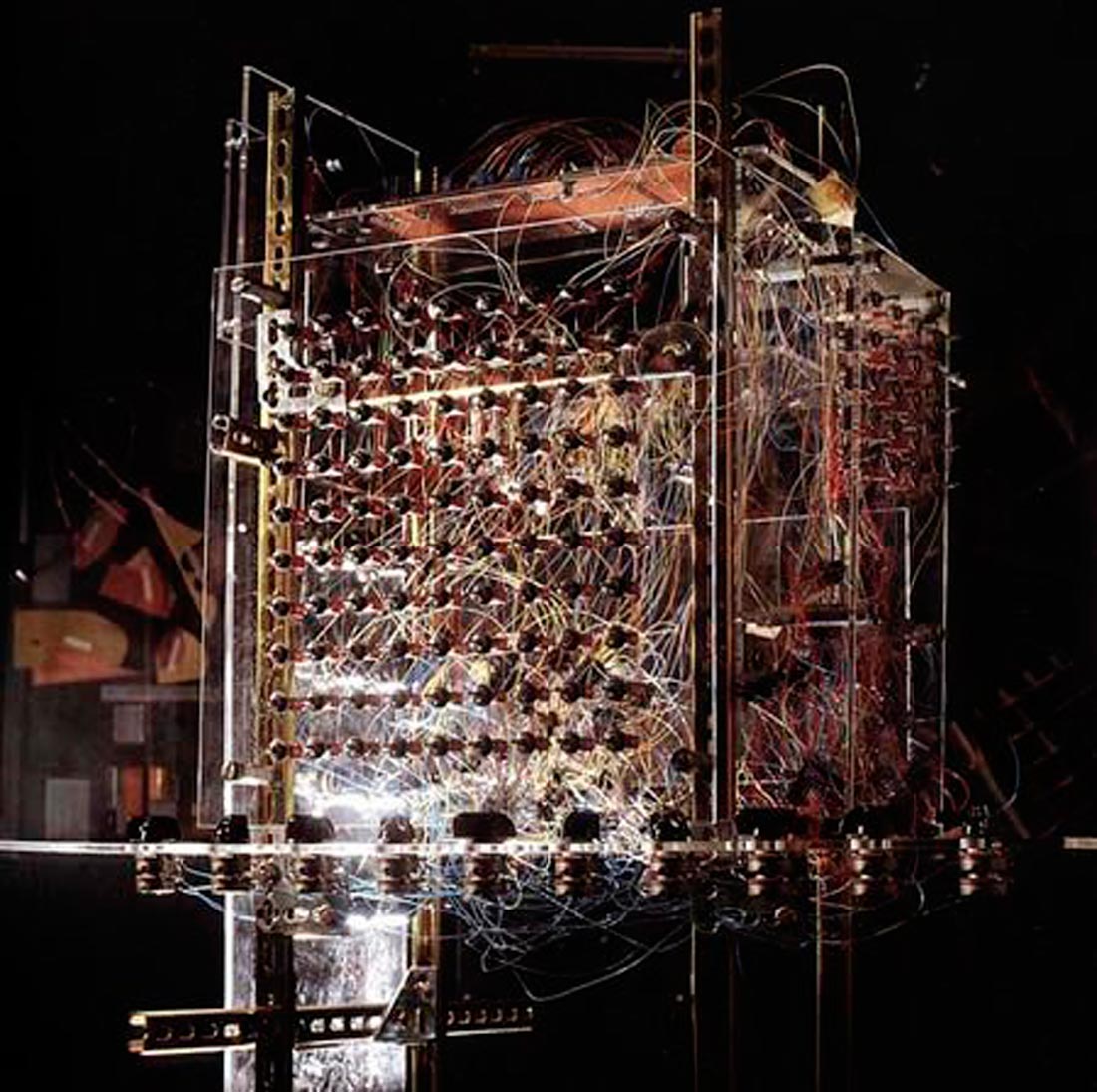

The Generator © Canadian Centre for Architecture

To provide some context, neuroscience, in the modern sense of the term, emerged around the 1950s thanks to breakthroughs in electrophysiology, computation and molecular biology. It drew not only on these fields but also on advances in nephrology, psychology, neurology, psychiatry, oncology and medicine.

Like many disciplines that were developed and funded in the post-WWII period (notably physics and nuclear medicine), neuroscience as a modern discipline grew through military funding for academic institutions, such as MIT, and for military institutes, such as the Walter Reed Army Institute of Research (WRAIR). Neuroscience grew out of these projects, as researchers were urged to study brain anatomy, physiology, psychology and psychiatry simultaneously. This multidisciplinary ethic carried neuroscience beyond the clinical sphere, a trait that, after promising beginnings, flourished as the discipline matured.

Like numerous other technological research disciplines, neuroscience, and more specifically neurotechnology, was subject to institutional authority, an issue that will be discussed in an upcoming essay. Fast-forwarding through recent history, neurotechnology has largely escaped institutional authority and has become available to a broad community of individual enthusiasts, with applications mainly in neuroimaging and brain-computer interfaces.

Concurrently, architecture (through collaborations such as the iconic duos Price-Pask and Negroponte-Minsky, among others) began integrating control systems, robotics, kinetic systems, computation, artificial intelligence, machine vision and cognitive science: in other words, cybernetics. In these collaborations, the complexity reached in theorizing and the complexity reached in prototyping were often incongruent. After working closely with architects, Pask argued that they are, first and foremost, system designers, but that they lack an underpinning, unifying theory, which he believed cybernetics could provide. Whether or not the claim is fully legitimate, cybernetics' relevance to architecture has proved immense. Cybernetics also had a profound impact on a long-established, permanent urgency: the ongoing disciplinary crisis expressed in architecture's need to augment, reinvent and reproduce itself in an ever-expanding manner.

One of the main scientific roots of cybernetics is neurobiology. After first-generation cybernetics had given a blunt answer to its foundational question, “What is a brain?”, Ashby noted in 1961 that it was up to second-generation cybernetics to answer the question “What is mind?”

The brain deeply interested the cybernetics community, as four major books on the subject attest: Ross Ashby’s Design for a Brain (1952), Grey Walter’s The Living Brain (1953), John von Neumann’s The Computer and the Brain (1958) and Stafford Beer’s Brain of the Firm (1972). All four embodied a foundational interest in making machines that could act like brains.

Architecture, by contrast, has never made the brain part of its scheme. The main body of architectural work has moved from the Enlightenment and humanism to the sensational and animate project, then to empathetic design informed by Gestalt theory, and finally to the phenomenological project. Central to most of these projects is an idealization of the human being and only a blurry relation to the brain, since what they primarily characterize is the architect’s own brain. Nevertheless, the best of these works imported a cultural discourse that was highly relevant in its time. As for today’s production, architects have contributed, at best, kinetic gadgets, efficient environmental systems, audiovisual effects and fabrication techniques, among others, while failing to question, fundamentally and reflexively, increasingly pressing topics of external and perhaps existential relevance, such as robotics, artificial intelligence and neuroscience.

The similarities between robotic and architectural systems can reasonably be attributed to architecture’s role as the first machine, that is, the first assemblage of tools for mediating material and cognitive effects. The very first attempts at various scales, from the architectural to the urban, made by projects such as The Fun Palace (Price) and URBAN5 (Negroponte), put forth a diverse set of questions. The former proposes an autonomous built environment, which could be read as a possible answer to the question: “What if the building had a brain?” Evidently, that required an interface, an aspect Negroponte clinically demonstrates in his project. In both cases, moreover, the participatory nature of the project celebrates the user, as each revolves mainly around its inhabitants.

Moving beyond the specifics of these two projects while retaining the main problematic they pose, this discourse sets out to examine state-of-the-art technologies and to formulate critical, self-reflective questions about architecture. In this architectural singularity, the primary purpose of creating space is no longer to sustain the biological constitution of human bodies but to prioritize a cognitive experience as a synthesis of physical and virtual spatial constructs. At a time when we no longer rely exclusively on materiality to construct space, architecture must trade its fundamental mainstays, such as stasis and functional determinism, for cultural survival. It must give up conclusive creative agency, control and supervision over closed-loop design processes and instead embrace open technological frameworks as the dominant social platforms through which architecture can be shaped collectively. For many, this is architecture’s new problem of autonomy: the autonomy of the design process from the architect’s design schemes.

Ever since the Renaissance, as the ideas of authorship and space became key to what architecture is, indexical systems have pushed the architect a step back from the physical building itself, without eliminating the architect as the author of the project. We have come to a point where builders are machines, notational systems are digital, and the architect has moved not a step but a thousand miles away from whatever is not architecturally fundamental. Stretching this idea to its extreme, we can now envision systems that would turn our brains into a notational system for machines to interact with.

But is the architect still the author? What is the role of the architect in such a case? The answer follows the same trajectory as the notational systems described above: architects once made buildings, then progressed to designing buildings, then to designing processes, and so on, up to the present moment, where it might be enough simply to have an idea.

Neurotechnology opens up the possibility of users manipulating spatial states and, in parallel, equips buildings with an indexical system of response. Accepting that space is ever-changing and triggered by its users, this marriage of neurotechnology and architecture puts forward the idea of equipping the building with a processing unit, a brain, through which bidirectional communication can take place between architect and building and between user and building.

From the book An Evolutionary Architecture by John Frazer

Such artificially intelligent architecture should not be characterized by its formal imitation of natural processes or by its practical response to their existence; rather, it should be defined by the complexity of its own behaviour as it autonomously navigates a singular world of biology and data.

By linking physiological processes and mechanized assemblages directly, architecture becomes a robotic system that has unique behavioural traits and is tethered to humans. Here, technology rather than the body becomes the interface that negotiates between human cognition and a synthetic formal order of space: a trans-architecture. Architecture becomes the new body.

If trans-architecture is a spatial construct that negotiates between human cognition and the environment through technological frameworks, the proposed architecture is the absence of this negotiation. The proposed architectural system would no longer prioritize the human experience as its raison d’être. Rather, it would be driven by its own autonomy, with material and virtual constructs on an equal footing in its proliferation: the human becomes an actor rather than the epicenter.

Such architectures are concerned with their own building and unbuilding: the production, destruction, evolution and recomposition of transient space. As proposed in media-art theory, these formalizations are inherently mobile, traveling between the digital and physical worlds, and are defined by cumulative ecosystems of robotics, media interfaces, computational logics and data streams.

Urban environments are larger-scale constructs, composed at their core of works of architecture organized within an urban medium. Urban environments, too, thrive within a heterogeneous sphere of the physical and the virtual, so the ideas above have many applications at that larger scale. Whereas at the architectural scale the discourse might impose a certain autonomous drag, urbanism offers a more pragmatic method, one that permits a degree of simplification for the purpose of urban-scale prototyping.

Given all the information, networks, layers and materiality of the city, two extreme insights surface. On the one hand, owing to the growing robotic and computational infiltration of the urban fabric, cityscapes are occupied by machines more than ever and have thus acquired a machinic way of being. On the other hand, a more humanistic approach makes humans the priority, granting them an exaggerated importance that seems destructive. Large-scale, computation-infiltrated environments, although primarily designed and occupied by humans, are increasingly conquered by machines and machine vision, whose presence keeps escalating. At best, this interaction is unidirectional: systems gather data, but never data about what actually makes a difference, namely the brain.

As futuristic as it sounds, plugging the human brain into a cityscape of computation allows for a more extreme machine landscape, while integrating our most complex lump of intelligence into what other agents act upon. The notion of the brain as a read-only open object has been overcome now that writing to the brain has become feasible; in turn, this stretches the above into a brutally extreme hypothesis.